Organizing information using LLMs

Summer 2023 — Website & Python project

Motivation

The term "language model" is a bit of a misnomer — as they currently exist, they're more like "language and knowledge models". For example, these models know that ripe bananas are yellow, which is a fact specific to knowledge of the world, not a fact necessary to understand or model language per se. Since that information is contained in the model weights, it must be doing something beyond modeling language alone.

Furthermore, language models are optimized to model their inputs as best as they can with the fixed resources (parameters) that they have. Therefore, they likely model their inputs with an efficient internal representation — any less efficient representation would be discarded in favor of a more efficient representation, because of how these models are optimized. (Waving our hands a bit here - the technical details of optimization don't guarantee better results, but in practice these models tend to get better throughout the training process).

Since language models have an efficient internal representation of knowledge, we should be able to extract that representation of knowledge and convert it into a user interface that allows a human to browse the model's efficient representation. In other words, when learning about a topic, we should be able to piggyback on language models' existing learnings to more quickly learn about the topic. If the language model identifies a cluster of information that all pertains to the same topic, then we should provide that cluster of information to the user, so that they need not spend time discovering that such a cluster exists. The ultimate goal is to optimize human learning of topics by using language models to provide the best layout of information for learning the topic. In other words, we can use language models to accelerate human learning.

There's was a wide gap in the market for information. On one end of the spectrum we have Google, which provides a breadth of information without going deep into topics and doesn't provide easy ways to browse information (I would argue that clicking on links is not an optimal user experience), and on the other end we have Wikipedia, which provides a lot less content but goes deep into the topics for which it has content.

Project Goal

My goal for this project was to build a website that uses LLMs to organize information into a clean and browsable user interface, in such a way that optimizes learning about new topics. This is partly inspired by the AMBOSS knowledge base which is exactly that but only for medical information, for doctors to use on-the-job to easily reference trusted content. I kept that site in mind when designing the algorithms and user experience for this project.

Approach 1: Exploring the limits of ChatGPT

In the vein of using language models to extract and organize information, my first approach was to use an out-of-the-box, state-of-the-art language model, for which ChatGPT was my primary choice. Keep in mind that my only access to this model was via an API, or via a web browser, so I had no access to the model internals, e.g. weights, activations, loss values. Thus, the act of figuring out how to organize information with ChatGPT was entirely a matter of prompting — what prompts can allow me to learn most efficiently about a topic, and how do I guarantee that the information is correct/not hallucinated?

Generating study questions

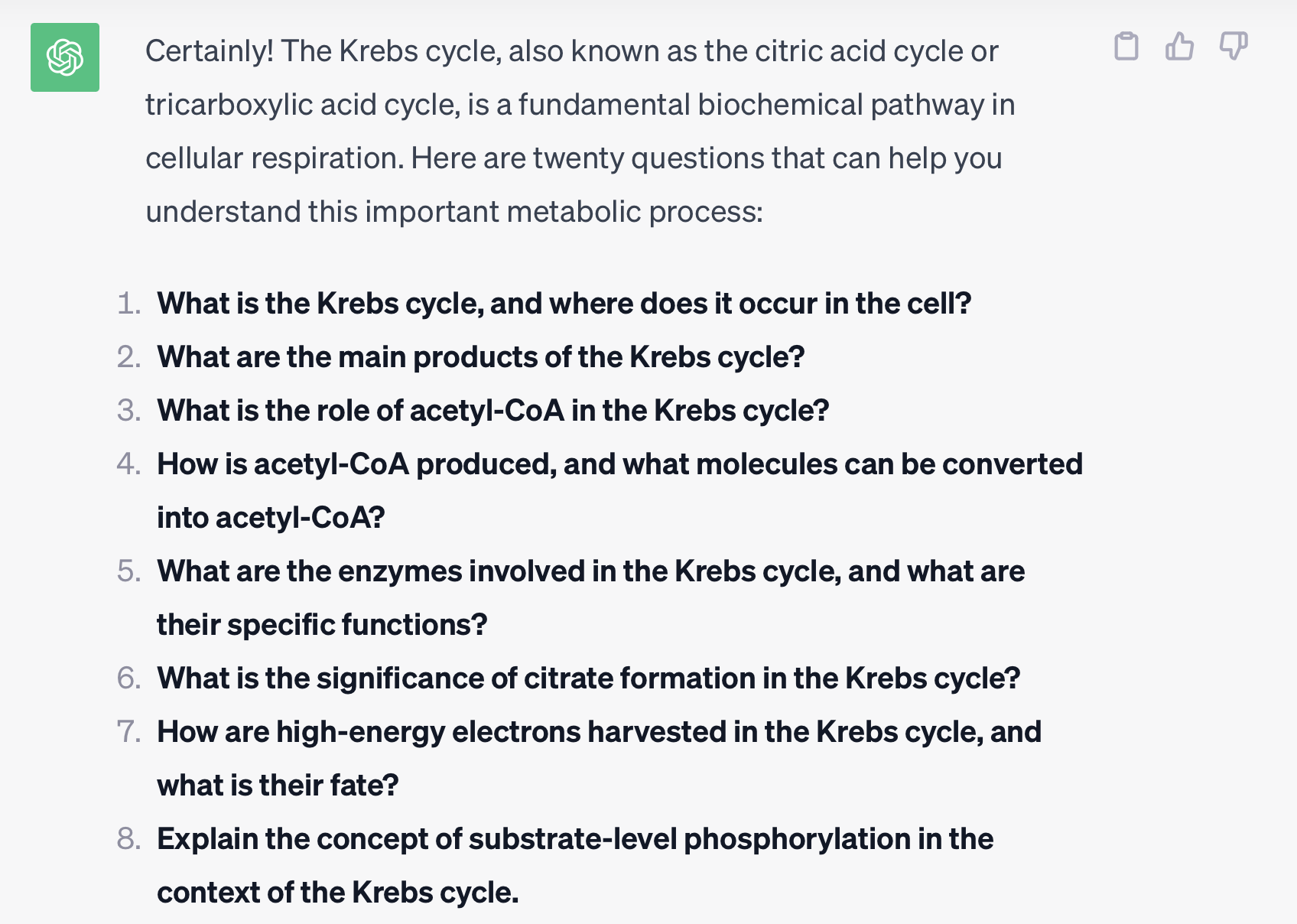

The very first experiment I ran was to give ChatGPT the prompt, "Give me a list of twenty questions that are most useful when learning about X," where I would replace X with a specific topic. See the example below for results about the Krebs cycle.

Why did I ask for output questions, instead of direct information? Since ChatGPT hallucinates information, we risk ChatGPT generating incorrect information about a topic. Instead, if we ask for study questions, in the worst case the questions is ill-posed, may be based on a false premise, or may give the user the wrong sense of a topic, but it never directly provides the user with incorrect information.

Right off the bat, we see reasonably good results for topics that are commonly encountered in school/university. This is expected, given that ChatGPT is best at summarizing topics that contain a lot of data in the training set. From a product perspective, we can package the results as a "study guide" for a topic. This poses two questions:

How do we select a set of topics to generate study guides?

How do we get answers to the questions?

Generating topic trees

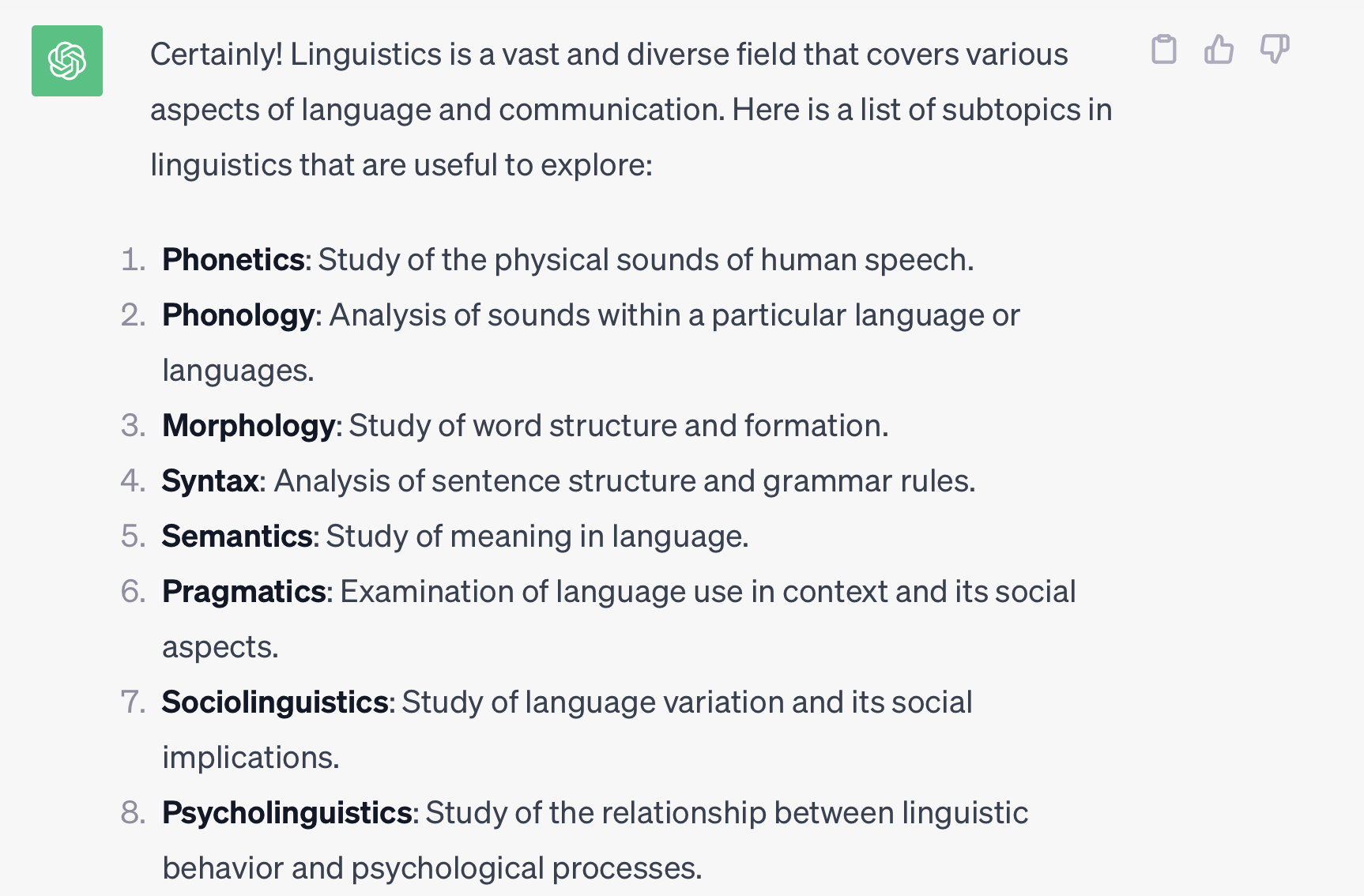

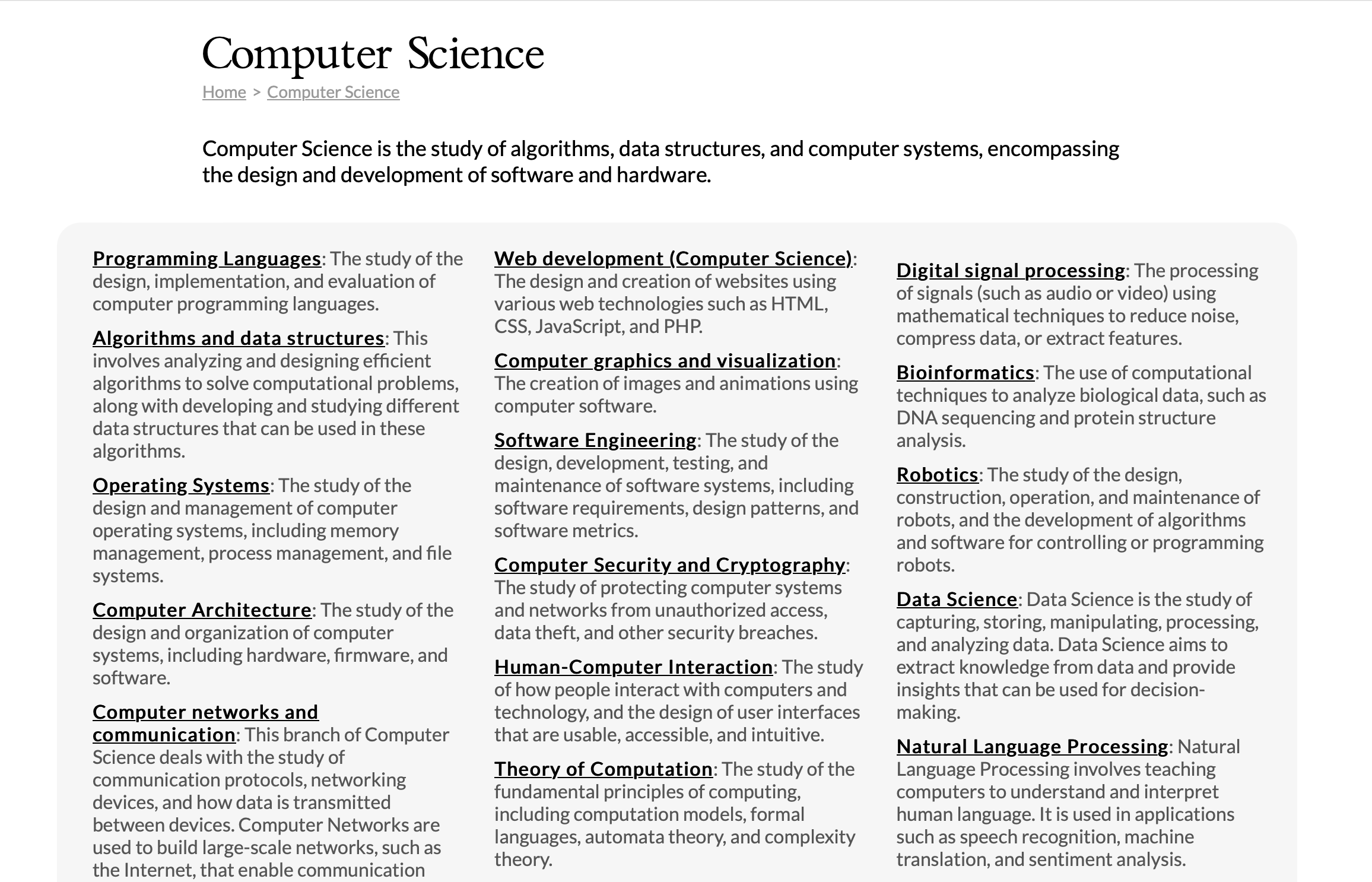

In terms of selecting a list of topics to generate for a website, I once again deferred to ChatGPT to give me the answer. I prompted it with, "Give me a list of topics commonly learned in school," and it returned a list of topics. Then, for each topic, I asked ChatGPT once again to generate me a list of topics by prompting it with, "Give me a list of subtopics that are most useful to learn about in the topic of X". See below for an example output.

I experimented with different prompts to generate subtopics, including, "Give me a list of all subfields of the field of X", and "Give me a list of all types of X," but ultimately I found that using the word "subtopic" and emphasizing that the prompt is for learning ("...most useful to learn about...") gave the best results.

Once we get a list of subtopics, we can repeat the process of finding subtopics by feeding in the subtopic back into ChatGPT, and having it return subtopics of the subtopics. This process generates a tree of topics, but ultimately after a few levels of topic unwrapping we start to see ChatGPT increasingly unable to generate subtopics for topics (the tree of human knowledge is indeed finite!). We also started to see repeat topics in different parts of the tree. For the purposes of a first draft of the website, this method was good enough, and I manually limited the topic unwrapping process to about four or so levels. Check out zilwiki.com for a demo of the tree of topics.

Getting answers to study questions

The last part of this demo site that I wanted to get up and running was to actually provide answers to the study questions that ChatGPT provided, and I wanted to make sure that the answers referenced trusted sources (i.e. input documents) instead of relying solely on ChatGPT-generated text. This effort proved to be tricky, since for each question we would need to somehow search a corpus for snippets of text that may contain the answers. This may require many passes of reference text through ChatGPT to determine whether a reference snippet answers a question, and still ultimately may be prone to ChatGPT hallucinations.

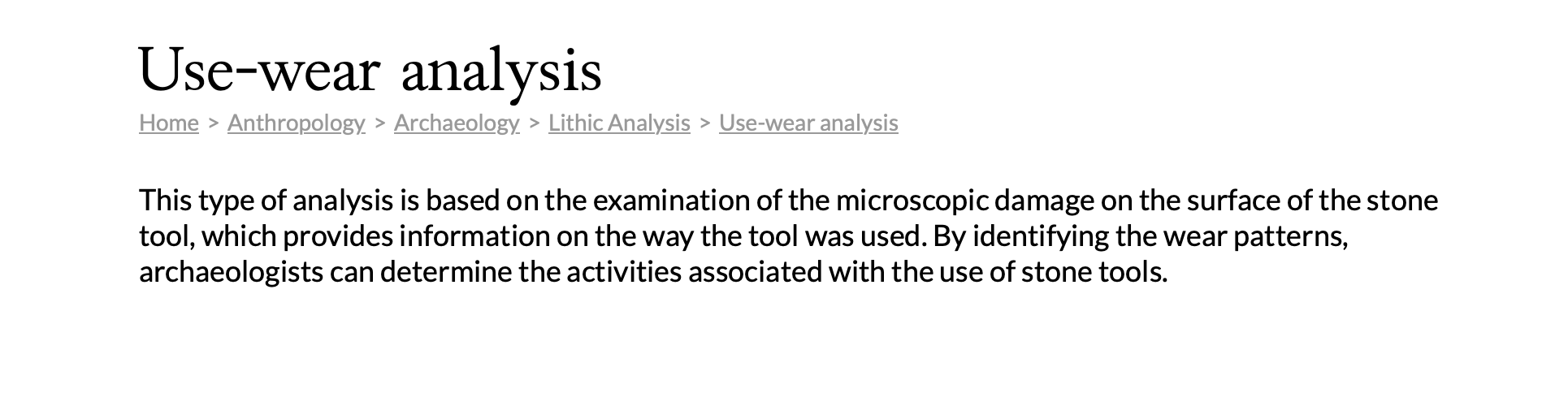

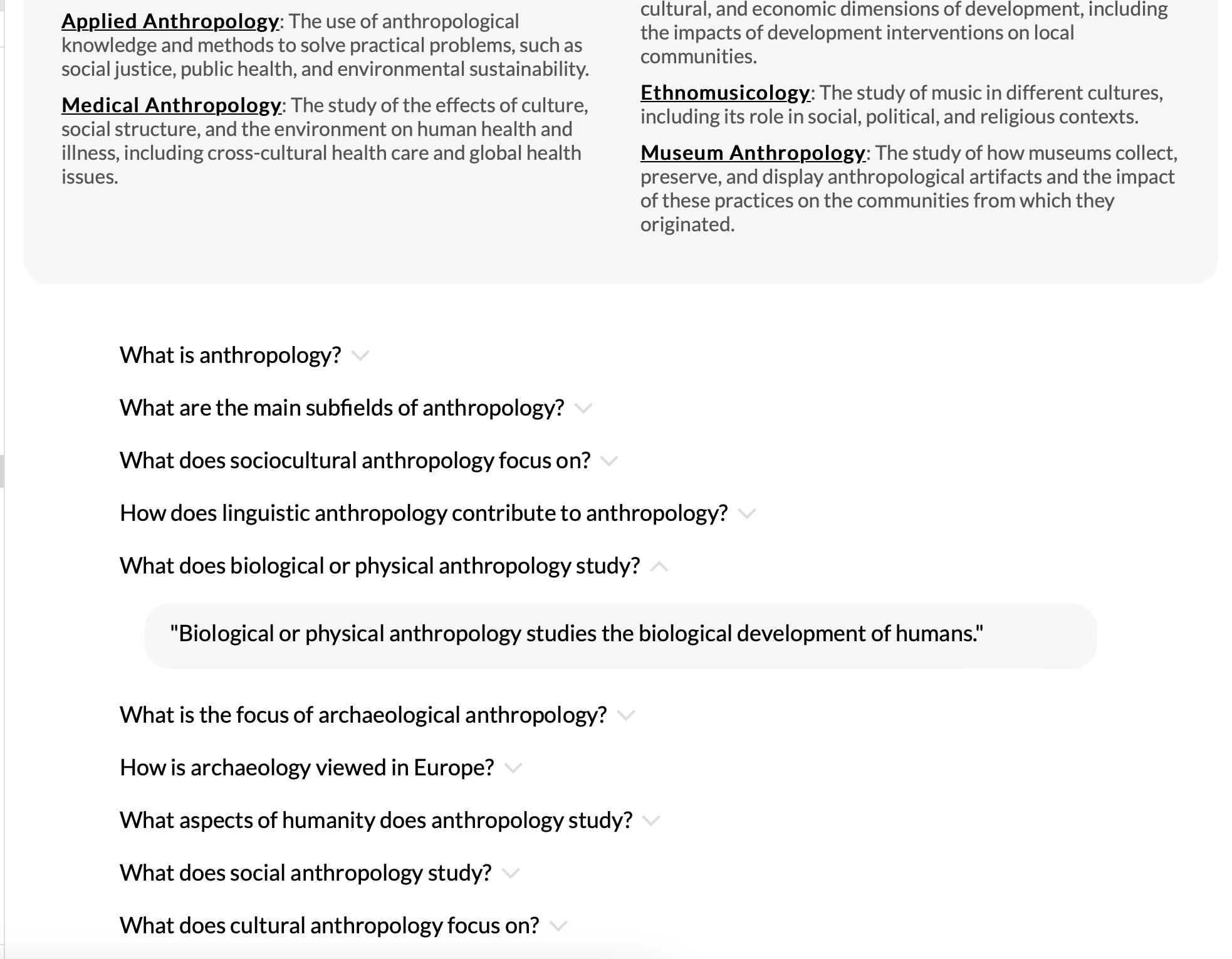

I opted for a different approach in order to limit the scope/difficulty of the problem. I decided that instead of generating study questions and searching reference text for answers, I can generate study questions from reference text. Once again, checkout zilwiki.com for a demo. My approach was as follows:

Determine the Wikipedia page for a topic, if it exists. To do this, we can ask ChatGPT to give a link to the Wikipedia page for a topic. While a bit hacky, it's good enough for our purposes. This is definitely an area of improvement for this algorithm, however.

Load the Wikipedia page summary using the Python Wikipedia API.

Use ChatGPT to generate questions from the Wikipedia page summary, simultaneously providing answers to the questions using the reference summary. The prompt here explicity asked for quotes from the input text that answered each question. This mostly avoids the problem of hallucination, and provides the user with a reference so they can check their answers (similar to the "People also ask" feature on Google).

Drawbacks of using ChatGPT

There were a few drawbacks of using ChatGPT to generate content for the site:

ChatGPT is only good at dealing with information that has a lot of training data. Once the topics became more niche, ChatGPT had more trouble generating subtopics and study questions, and in general the availability of Wikipedia pages for those topics was also pretty low.

ChatGPT still hallucinates even when given reference text. Ultimately, the problem of hallucination wasn't entirely solved by feeding in reference text, since sometimes ChatGPT would use the reference text incorrectly. Performance was much better than without using reference text, however.

This approach is expensive at scale. Even with $2 per one million tokens using gpt-3.5-turbo, this approach can get expensive when we're dealing with many topics. Since we're dealing with topics that grow exponentially in size every time we unwrap topics, we can quickly get to a scale that becomes infeasible at a fixed budget.

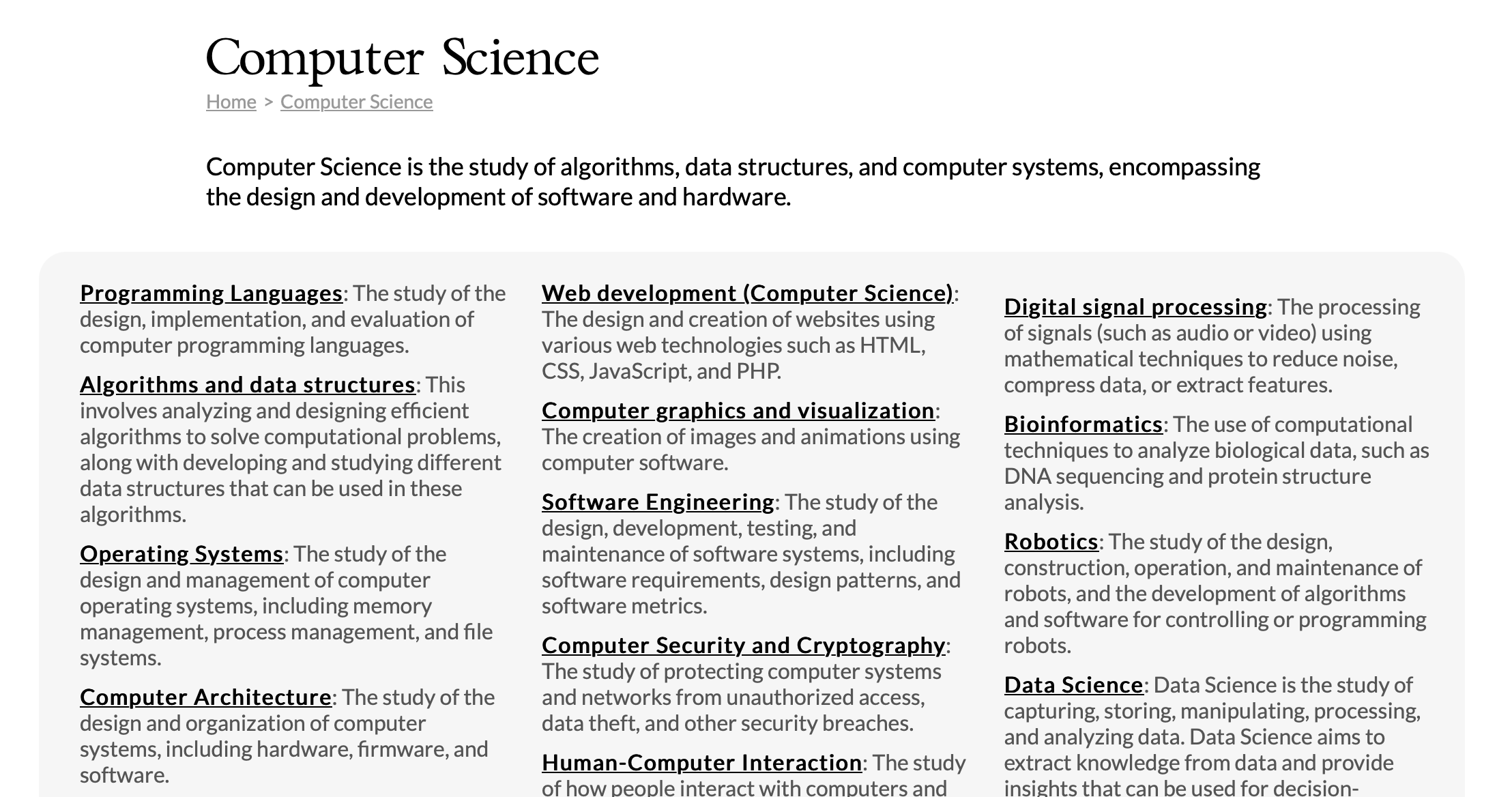

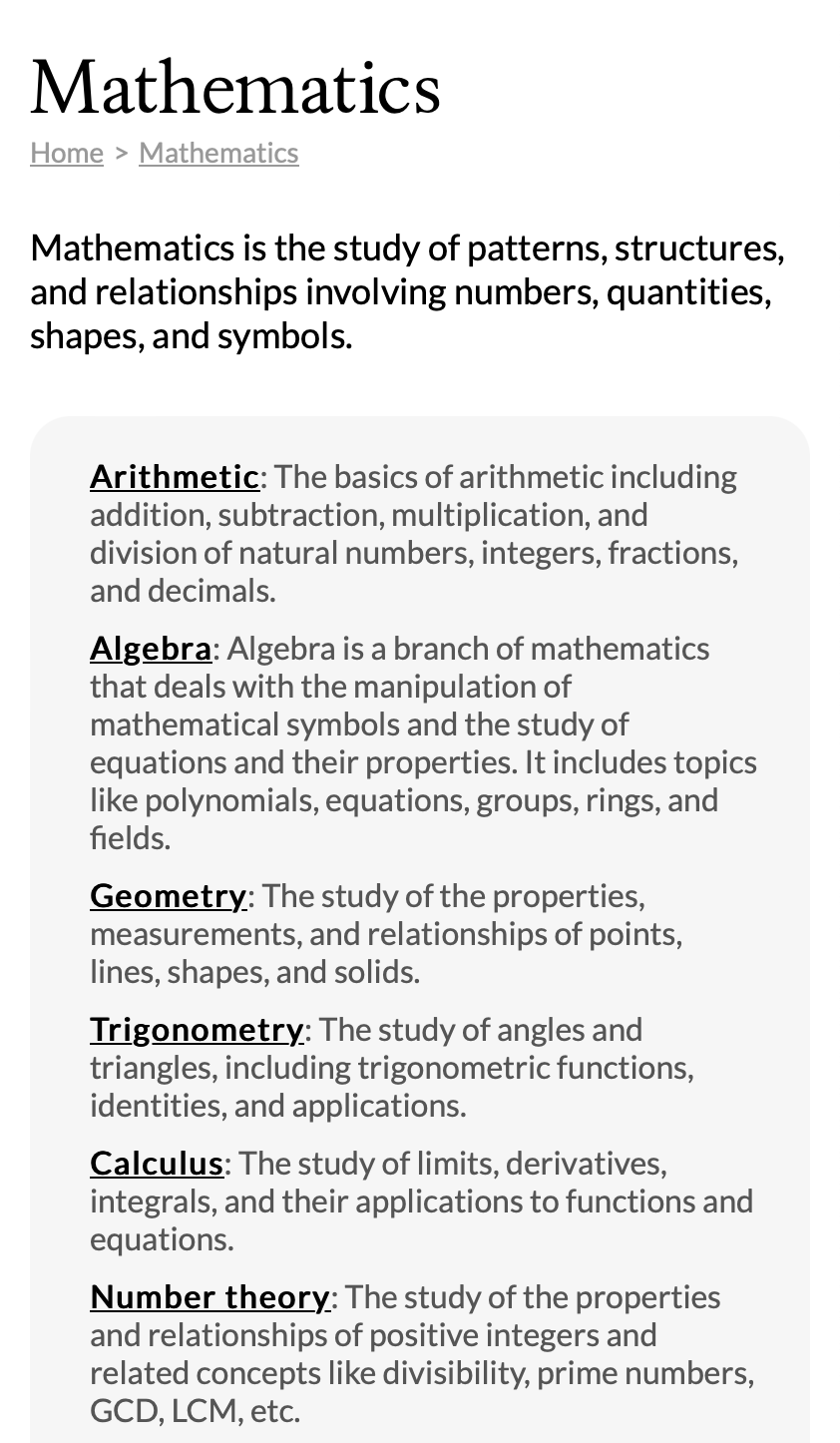

Demo website screenshots

The following are some screenshots from the final demo website.

Approach 2: Syntax parse trees for knowledge graph generation

In the previous approach, I used ChatGPT to organize information about topics. This wasn't what ChatGPT was explicitly designed for, but it's a capability inherent in language models. In this approach, I set out to design a language model that would make it easier to extract the underlying structure of knowledge, and ideally do so more cost-effectively.

My plan for designing a model that a more transparent representation of knowledge, was to first create a knowledge graph of the underlying dataset, then model the knowledge graph. Each entity in the dataset would be a node, and each piece of information would be represented by an edge in the graph. Then, the model would be merely a matter of graph modeling, i.e. predicting edges in the graph, and we could rely on existing methods for constructing graph embeddings to create embeddings for entities.

Benefits of this approach

This approach would have a few benefits:

First, we would already know what's a "thing" in our model, because each "thing" is a node. This would make the problem of topic discovery much easier.

Second, each entity will have it's own embedding, and we would be able to see very easily which pieces of information (edge) fit better into the entity's model/embedding. The lower the edge's loss given a node's embedding, the more "central" the piece of information is to the model, and the higher we will rank that information when browsing data about the entity.

Third, we should be able to create topics and subtopics from edges in the graph, because clusters should naturally form in the entity embedding space. Each cluster can be a topic, and the clusters should have sub-clusters which can be the subtopics.

Each of these benefits highlights one part of the overall goal of this design: if we design a language model that allows us access to internal representations of knowledge, then we should be able to very easily convert those representations of knowledge into a browsable user interface. This is evindent because each of the aspects of the website (a list of topics, information ranking within a topic, subtopic discovery) are easily queried in this language model (outputting the list of nodes, looking at the loss of each edge connected to a node, and clustering nodes).

Constructing the knowledge graph

Recall, this approach is to 1) construct a knowledge graph from the input dataset, then 2) model the graph. How do we plan on constructing such a knowledge graph?

Right off the bat, I was wary of using existing knowledge graph datasets, or knowledge graph extraction algorithms, because they tend to lose a lot of information about entities in the process. I wanted to construct a knowledge graph that contained all the information captured in text, so I experimented with designing my own, more information-dense knowledge graphs.

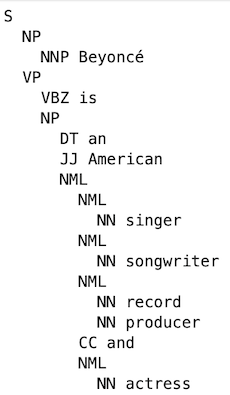

I started with hand-writing knowledge graphs from reference text, to get a sense of what the knowledge graphs look like, and to get a sense of how I might go about automatically constructing them from text. I found that the structure of the resulting knowledge graphs were practically equivalent to syntax parse trees of the reference sentences, albeit with a few nodes shuffled around. I realized I could effectively create the knowledge graphs deterministically from the syntax parse trees. Additionally, in order to fill in missing pieces of the input sentences, I needed to also perform coreference resolution, entity recognition/disambiguation, and word sense disambiguation. Between those three tasks, plus syntax parsing, I would be able to construct knowledge graphs from the input sentence. For those tasks, I used out-of-the-box solutions I found online based on BERT architectures.

Modeling the knowledge graph

Once we had the knowledge graph available, we could proceed with creating embeddings for the nodes in the knowledge graph. For this approach, I adapted the word2vec model for the knowledge graph. For each node in the knowledge graph, I trained its embedding to predict the embeddings of the edges around it.

Drawbacks of this approach

The main drawback of this approach was that it was difficult to train at scale since the number of embeddings in the knowledge graph is high — I needed a lot of VRAM to fit them onto my GPU simultaneously. I toyed around with different methods for generating embeddings on the fly for nodes that only have a few edges, in order to save VRAM, but still the overall required computation was very high. This could be mitigated by training on different parts of the knowledge graph on different machines, but because all parts of the knowledge graph use the same base set of words, we still need to share weights for the underlying word embeddings. Even when I trained the model on a smaller toy dataset to verify assumptions more quickly, the results weren't very good because the model needs a lot of data in order to capture meaningful correlations not immediately present in the training dataset.

Additionally, embeddings need to be very large to capture all the correlations in the dataset - I tested with reasonably small embedding size (~300) but even that was insufficient to get results that I wanted.

Ultimately, I learned that the bitter lesson still holds! I came to think of this approach as attempting to unbundle ChatGPT into models that each perform a slightly different step of the language modeling process. This approach has the benefit of making the internals of the language model more interpretable. The problem was that I still needed a lot of computation in order to model knowledge at a similar level as LLMs — no amount of unbundling of data would change that. I effecively re-learned the bitter lesson in the process of developing this approach. Ultimately whichever way it's done, modeling a dataset is modeling a dataset. All models achieving the same performance on the test set will be capturing the same patterns, albeit maybe in roughly different ways. Maybe some models can be used more easily in ways that are harder with other models, but I think every model architecture can be adapted to perform the same task.

A final note for this approach: in the introduction to this project I mentioned that existing language models model both language and knowledge. In this approach, I attempted to separate modeling language and knowledge, and had separate steps for each kind of modeling. The problem I ran into at the end, was that when it came to modeling knowledge, I needed a lot of computation to get good results. This makes me think that most of the computation/modeling of LLMs is in modeling knowledge. This is also evident in the fact that smaller models achieve good results at intermediate language tasks like syntax parsing, coreference resolution, etc, so the value added from LLMs is the ability to model knowledge. In other words, the modeling knowledge requires so much more computation than modeling language, because we only see success at this task when we use data- & compute-hungry LLMs.

Approach 3: Ranking information about a topic

In this approach, I returned to using a transformer architecture as the foundational model, and I focused on using those models solely for the task of ranking information about a topic. This method is still in development — write-up coming soon!

A closing thought on the endgame of artificial intelligence

What's the ultimate goal of AI? One intermediate goal, as explored in this project, is to improve access to knowledge. Another intermediate goal, as shown by ChatGPT's capabilities, is to use existing knowledge to make predictions about the world, and to automate tasks. My guess is that the ultimate goal of AI is to automate the discovery of knowledge, both by automating experimentation and by automating analysis of the experiements.

If AI has the ability to automate the discovery of knowledge, then part of its available resources can be used to discover how to make itself more efficient/cost-effective, improving itself over time. This is, in fact, the entire idea behind "the singularity". The rest of its available resources can be devoted towards expanding other areas of science and technology. However, this AI would be constrained by the physical world, so the amount of progress it could make is ultimately unknown.

From a technological point of view, this is a compelling idea. From a human standpoint, technology is only part of the human experience, and it's important to not set aside other, more important parts of life in pursuit of technological progress.