Converting LIDAR to sound

Fall 2021 — Arduino project

A while back I came across research demonstrating that the brain can rewire itself in response to new sensory input. In one example, researchers converted visual input into electrical signals on the tongue, as a kind of visual prosthesis.

One day I became curious: can I train myself to see objects with my ears? Just like bats with sonar, I wanted to see whether I could train myself to visualize surfaces using sound alone. My plan was as follows: use a lidar sensor to measure distances to nearby surfaces and objects, and convert those distance values to sound through a speaker connected to the sensor. Then, by waving the sensor around with my eyes closed, I could train myself to visualize a surface from three cues: where the sensor was pointing, what pitch the device was producing, and how the pitch changed when I moved the sensor slightly to the side. As a longtime fan of spatial awareness puzzles, I was excited by the idea.
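
The distance-to-pitch conversion at the heart of this plan can be sketched as a simple linear map. This is a minimal illustration, not the code from the project: the range constants, the function names, and the choice that closer surfaces sound higher are all assumptions.

```cpp
#include <stdint.h>

// All ranges below are illustrative assumptions, not values from the project.
constexpr uint16_t kMinDistanceCm = 10;     // nearest distance that is mapped
constexpr uint16_t kMaxDistanceCm = 400;    // farthest distance that is mapped
constexpr uint16_t kMinFrequencyHz = 200;   // pitch at the far end of the range
constexpr uint16_t kMaxFrequencyHz = 2000;  // pitch at the near end of the range

// Clamp a reading into the supported distance range.
constexpr uint16_t clampDistanceCm(uint16_t cm) {
    return cm < kMinDistanceCm ? kMinDistanceCm
         : cm > kMaxDistanceCm ? kMaxDistanceCm
         : cm;
}

// Linear map: near surfaces give a high pitch, far surfaces a low one.
constexpr uint16_t distanceToFrequencyHz(uint16_t cm) {
    return static_cast<uint16_t>(
        kMaxFrequencyHz -
        static_cast<uint32_t>(clampDistanceCm(cm) - kMinDistanceCm) *
            (kMaxFrequencyHz - kMinFrequencyHz) /
            (kMaxDistanceCm - kMinDistanceCm));
}
```

With these assumed ranges, a reading of 205 cm maps to 1100 Hz, and anything closer than 10 cm or farther than 400 cm is pinned to the ends of the range. On an Arduino, the result could be passed to `tone(pin, frequency)` if the speaker is driven by a square wave rather than by a voltage level.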

I first tested this idea in an iOS app, using the lidar sensor on my iPhone and playing the result as a sound in the app. Ultimately, the latency was so high that the app was barely usable.

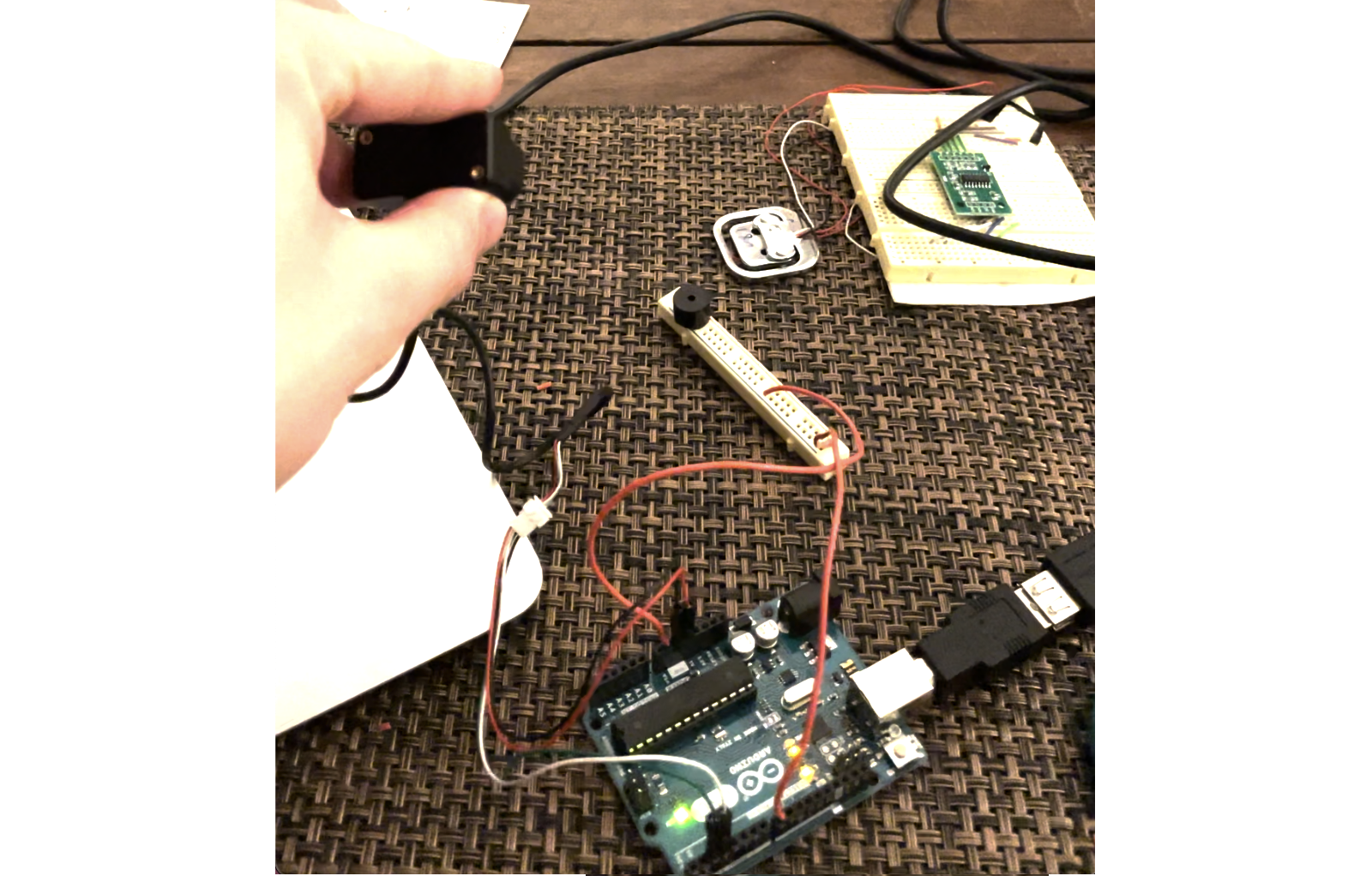

My second attempt was to build the device on an Arduino board, with a lidar sensor I found online and a small speaker connected to the Arduino. The speaker took a voltage as input and produced a pitch determined by that voltage, so I quickly hacked together an Arduino script that read the lidar sensor and output the distance value as a voltage on a pin connected to the speaker. The result was an admittedly annoying-sounding but 100% functional device. Check out the demo video below, and make sure to turn on sound!
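
A read-and-write loop of this kind could look roughly like the sketch below. It is not the original script: the sensor model is not specified here, so `readDistanceCm()` is a hypothetical placeholder, and the pin number, the distance range, and the near-means-higher-voltage direction are assumptions. On most Arduino boards `analogWrite` produces a PWM signal whose average voltage tracks the duty cycle, rather than a true analog level.

```cpp
#include <stdint.h>

// Assumed sensor range in centimeters (illustrative, not from the project).
constexpr uint16_t kRangeMinCm = 10;
constexpr uint16_t kRangeMaxCm = 400;

// Map a distance to an 8-bit duty cycle: near -> 255, far -> 0.
constexpr uint8_t distanceToDuty(uint16_t cm) {
    return cm <= kRangeMinCm ? 255
         : cm >= kRangeMaxCm ? 0
         : static_cast<uint8_t>(
               static_cast<uint32_t>(kRangeMaxCm - cm) * 255 /
               (kRangeMaxCm - kRangeMinCm));
}

#ifdef ARDUINO
#include <Arduino.h>

constexpr uint8_t kSpeakerPin = 9;  // assumed PWM-capable pin (it is on an Uno)

// Hypothetical placeholder: replace the body with the read call from
// whatever library your lidar sensor uses.
uint16_t readDistanceCm() {
    return kRangeMaxCm;
}

void setup() {
    pinMode(kSpeakerPin, OUTPUT);
}

void loop() {
    // Read, convert, and write on every pass, with no delay() in between,
    // so the output follows the sensor as closely as the hardware allows.
    analogWrite(kSpeakerPin, distanceToDuty(readDistanceCm()));
}
#endif
```

The conversion is kept separate from the hardware calls so it can be checked on a desktop compiler; the `#ifdef ARDUINO` section only builds inside the Arduino toolchain.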

Drawbacks

To make the device usable in a real-world setting, the latency between the lidar sensor and the output speaker would need to be significantly lower than what the Arduino setup achieved. From testing the device, I found that it was easiest to visualize surfaces when I was moving the device back and forth: the repeated change in pitch would tell me whether the surface was angled, and by how much. The slower the device responds, the harder it is to visualize the surface from sound. While the Arduino board's response time was reasonably good, it was not fast enough to keep up with rapid changes in the sensor's direction.
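
To put a rough number on why latency matters during a sweep, the small helper below computes how far the sensor has already turned by the time the tone catches up. The function name and the example figures are illustrative assumptions, not measurements from the device.

```cpp
// Angle (in degrees) the sensor turns before the tone reflects a reading,
// for a given sweep speed (degrees per second) and latency (milliseconds).
constexpr double lagDegrees(double sweepDegPerSec, double latencyMs) {
    return sweepDegPerSec * latencyMs / 1000.0;
}
```

For example, sweeping at 90 degrees per second with 100 ms of latency means the pitch describes a point 9 degrees behind where the sensor is currently aimed. Halving the latency halves that gap, which is why faster response makes quick back-and-forth sweeps easier to interpret.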