LIDAR in virtual reality with SLAM

Spring 2019 — Unity & ROS project

This project was my senior capstone project through the Topics in Collaborative Robotics course at Brown University. The goal of the project was to design a user interface for controlling robots through virtual reality, as a step towards full robot teleoperation. In particular, I explored a method for visualizing a robot’s environment in virtual reality using a Lidar sensor.

The main challenge I addressed was how to update the environment as the robot moved around a scene. Some folks at Brown’s robotics lab had previously worked with teleoperation in VR but kept the robot fixed in VR even when it moved about in real life. This had the effect of shifting the environment around the user whenever the robot moved, and it felt pretty clunky, especially when the robot rotated in space. My goal for the project was simple: whenever the robot moved in real life, I wanted the robot to move in VR while the rendered environment stayed fixed in VR. This presents a challenge: if the robot’s sensors are attached to it, how can we know exactly how the robot moved relative to its environment?

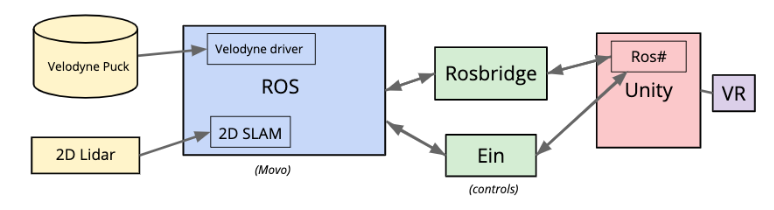

My solution was to run a SLAM (simultaneous localization and mapping) package on the robot and use it to dynamically update the point cloud & simulated robot rendered in VR, so that the environment felt fixed in VR space. The SLAM module output the robot’s change in position relative to its perceived environment, as measured by sensors at its base. Using those values, we can transform the point cloud in VR space accordingly. This project was a good exercise in integrating a number of software & hardware components into a single end-to-end stream of data. A diagram of the full software stack is shown below.
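To make the transform step concrete, here is a minimal planar sketch in Python. It assumes the robot moves on a flat floor, that the SLAM module reports each motion increment as (dx, dy, dθ) in the robot's own frame, and that Lidar points arrive as (x, y) in that same frame. `Pose2D`, `compose`, `to_world`, and `lidar_to_world` are hypothetical names for illustration, not the project's actual Unity/ROS code. The idea is to accumulate the increments into a world pose and map every scan point through it, so a wall seen from two different poses lands at the same world coordinate and stays put in VR.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical planar pose: position (x, y) in metres, heading theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, delta: "Pose2D") -> "Pose2D":
        """Apply a motion increment expressed in this pose's own (robot) frame.

        Assumes SLAM reports increments relative to the robot, so the
        translation is rotated into the world frame before being added.
        """
        c, s = math.cos(self.theta), math.sin(self.theta)
        new_theta = self.theta + delta.theta
        return Pose2D(
            self.x + c * delta.x - s * delta.y,
            self.y + s * delta.x + c * delta.y,
            # Wrap the heading back into (-pi, pi].
            math.atan2(math.sin(new_theta), math.cos(new_theta)),
        )

    def to_world(self, px: float, py: float) -> Tuple[float, float]:
        """Map one point from the robot (sensor) frame into the fixed world frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return (self.x + c * px - s * py, self.y + s * px + c * py)


def lidar_to_world(pose: Pose2D, points: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Transform a whole scan so it can be rendered in a fixed world (VR) frame."""
    return [pose.to_world(px, py) for px, py in points]


if __name__ == "__main__":
    # Start at the origin, drive 1 m forward, turn left 90 degrees, drive 1 m forward.
    pose = Pose2D(0.0, 0.0, 0.0)
    for step in [Pose2D(1.0, 0.0, 0.0), Pose2D(0.0, 0.0, math.pi / 2), Pose2D(1.0, 0.0, 0.0)]:
        pose = pose.compose(step)
    # A wall point the robot now sees behind it and to its right (robot frame).
    print(pose, lidar_to_world(pose, [(-1.0, -1.0)]))
```

The same wall point was 2 m straight ahead on the very first scan, i.e. world (2, 0), and the final pose maps the later observation back to that same spot. Rendering the raw robot-frame points instead, as in the earlier fixed-robot setup, would draw that wall at (2, 0) on the first scan and at (-1, -1) on the later one, which is exactly the shifting environment described above.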

Here’s a link to the full write-up of the capstone project. Two demo videos are shown below. The first demonstrates the Lidar point cloud rendered in VR, and the second shows the final demo, with the robot and the point cloud in VR updated based on the robot’s motion.

One challenge that arose was software unreliability: throughout the course of the project, certain parts of the stack would break or exhibit strange bugs, which extended development time. Furthermore, since the data was fed through ROS and Unity, the final point cloud lagged behind real time by about 45 seconds, which makes it unusable for real-world applications. If I were to continue this project, I would consider bypassing ROS and Unity entirely, sending the Lidar data straight to the VR headset’s host machine and rendering the point cloud with lower-level VR libraries.